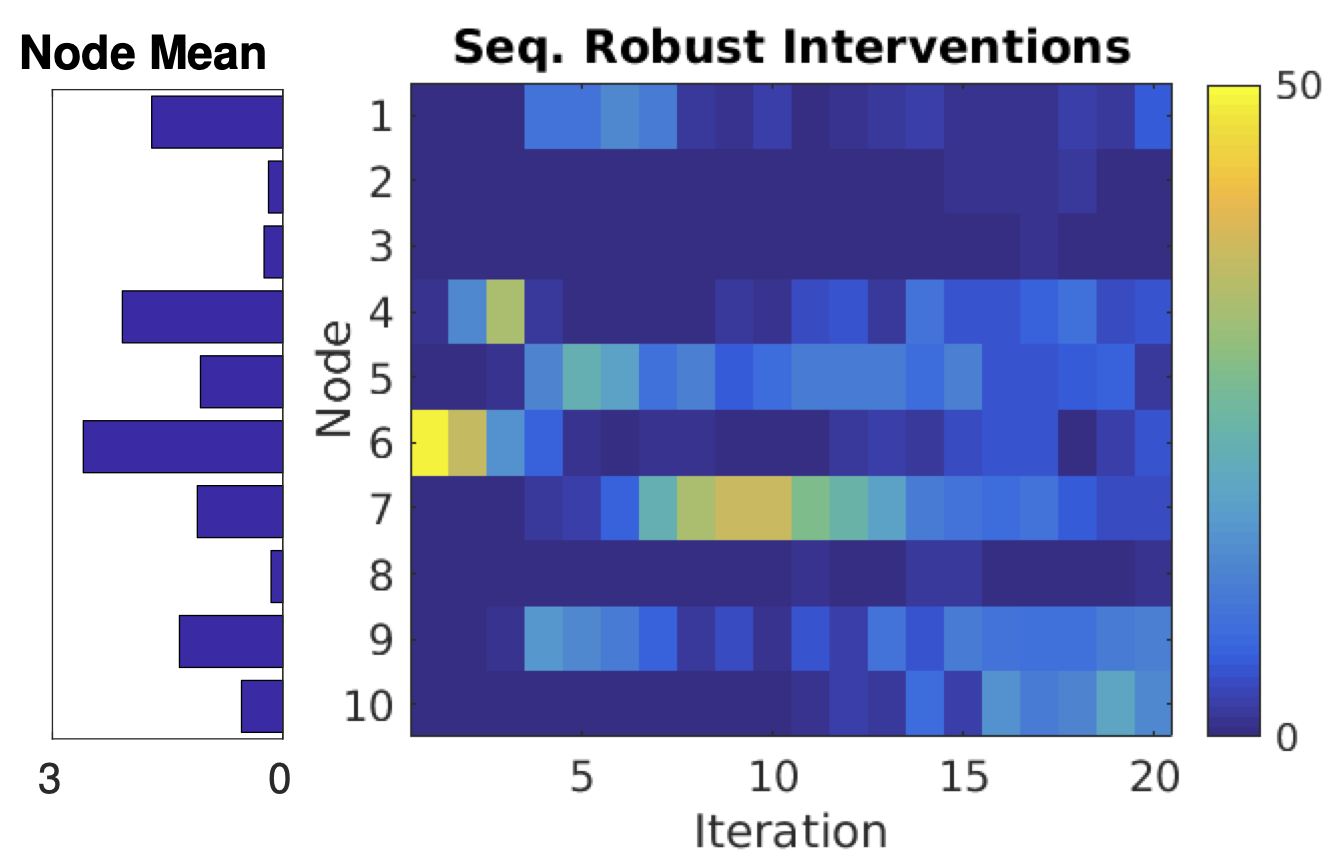

Integrates upper/lower MI bounds to formulate Bayesian Optimal Experiment Design (BOED) with successive refinement/tightening via adaptive allocation of computation to achieve a desired performance guarantee. Achieves the same guarantee as existing methods with fewer evaluations of the costly MI reward.

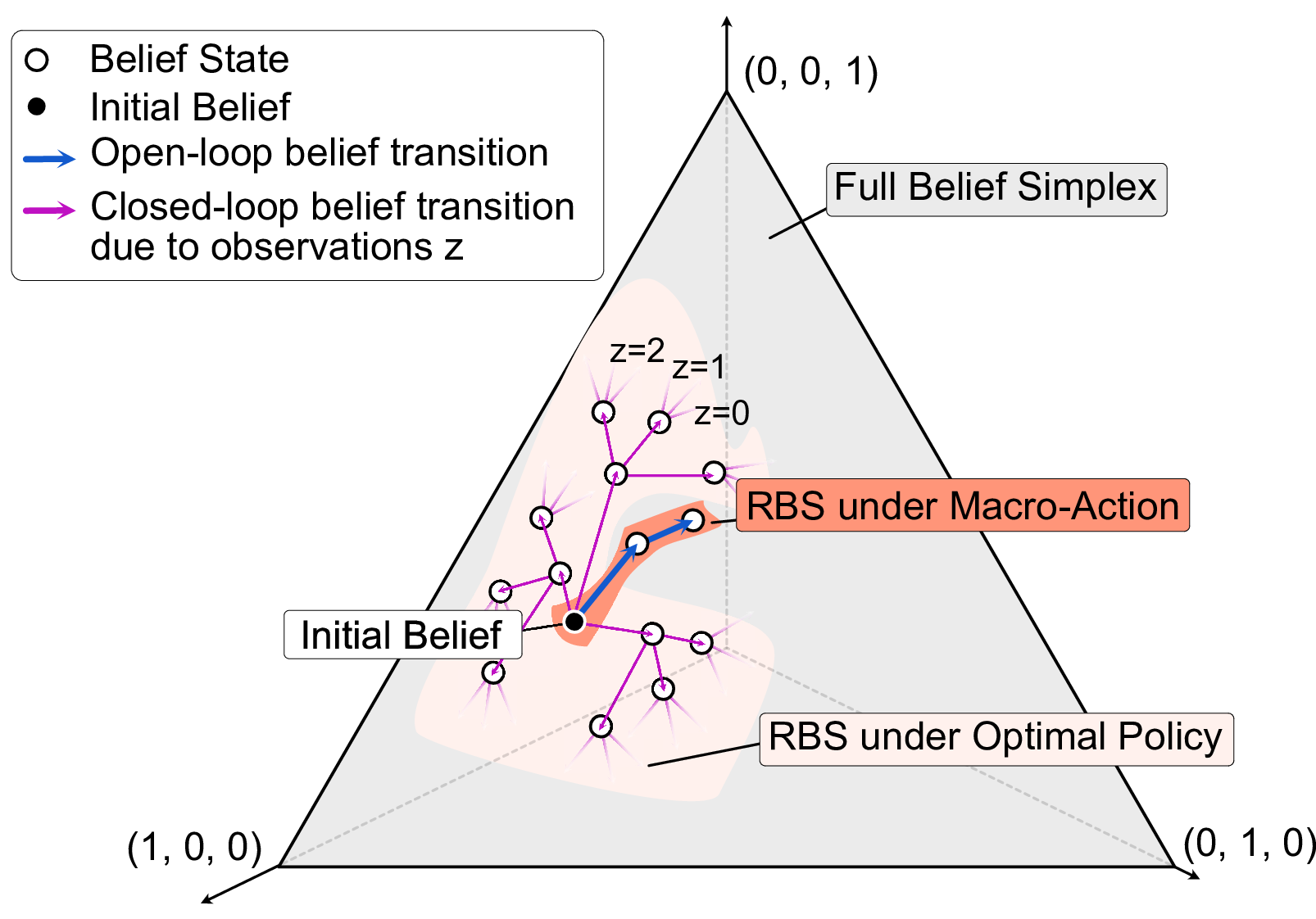

This work introduces macro-action discovery using value-of-information (VoI) for robust and efficient planning in partially observable Markov decision processes (POMDPs). POMDPs are a powerful framework for planning under uncertainty. Previous approaches have used high-level macro-actions within POMDP policies to reduce planning complexity, but are often heuristic and rarely come with performance guarantees. Here, we present a method for extracting belief-dependent, variable-length macro-actions directly from a low-level POMDP model.

Articulated motion analysis methods often rely on strong prior knowledge, e.g. as applied to human motion. However, the world contains a variety of articulating objects - mammals, insects, mechanized structures - with parts varying in number and configuration. We develop a Bayesian nonparametric model applicable to such objects, combined with coupled rigid-body motion dynamics. We derive an efficient Gibbs sampling procedure applicable to differing observation sequence types without need for markers or learned body models.

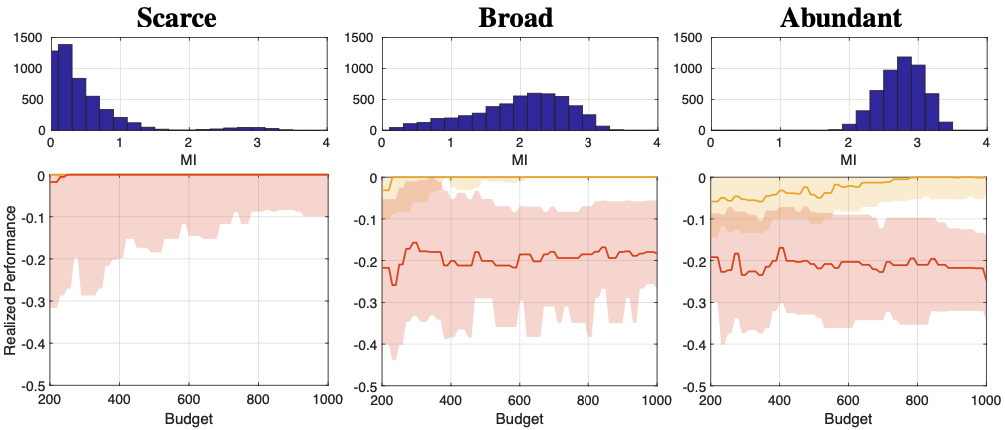

Mutual information (MI) is often used as a reward in information-driven Bayesian optimal experiment design (BOED). MI generally lacks a closed form, necessitating estimators. In applications where plan execution is expensive, one desires planning estimates which admit theoretical guarantees. Robust M-estimators yield bounds on the absolute deviation of MI estimates. We propose an integrated inference-and-planning algorithm leveraging sample reuse at each stage. Empirical results show improvement over recent methods for inference of gene-regulatory networks.

Presents a generative probabilistic model of temporally consistent superpixels where, in contrast to other methods, a temporal superpixel in one frame tracks the same part of an underlying object in subsequent frames. We use a bilateral Gaussian process model of flow between frames to propagate superpixels in an online fashion. We present four new metrics to measure performance of a temporal superpixel representation and find that our method outperforms supervoxel methods.