Summary: Following Ren and Malik, superpixels became ubiquitous as a preprocessing step in many vision systems (e.g. Felzenszwalb, Grundmann). Superpixels form an intermediate-level representation that preserves significant image structure while being orders of magnitude smaller than a pixel-based representation. Separately, work on motion analysis, ranging from Lucas & Kanade and optical flow to structure from motion, considered methods for establishing correspondences between image pixels or locations in consecutive frames. Inspired by these two lines of research, this work developed a representation for videos that parallels the superpixel representation in images. We call these new elementary components temporal superpixels (or TSPs for short).

Related SLI papers: Though different in nature, prior work on topologically constrained shape sampling led to thinking about TSPs. The formulation and implementation of TSPs involved birth/death sampling, which influenced later work on parallel sampling methods for Dirichlet Processes and Hierarchical Dirichlet Processes. GP-based flow models were used for layered tracking and influenced the use of GPs for Bayesian intrinsic image estimation.

Figures

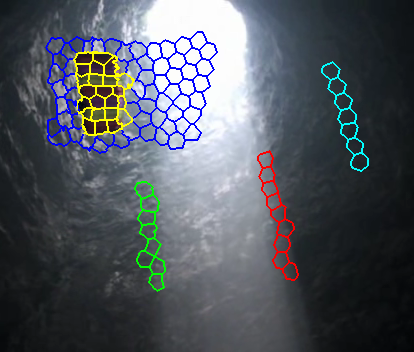

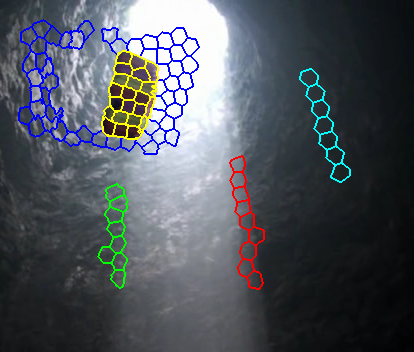

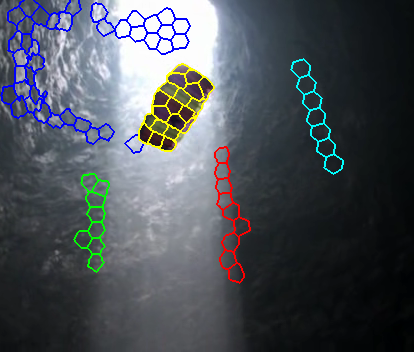

Example of TSPs. Note that the same TSPs track the same points across frames on the parachute and rock. We show a subset of TSPs, though each frame is entirely segmented.

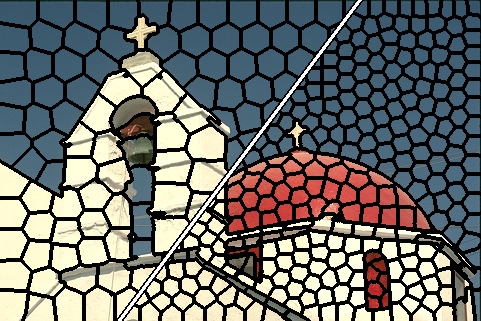

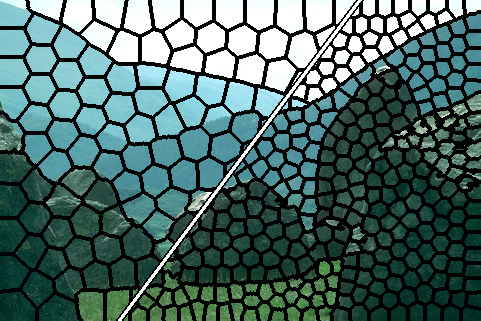

Example superpixels at two granularities of images from the Berkeley Segmentation Dataset.